retrain-pipelines and the almighty function-caller

Recently, the trend of models that "resonate" (a.k.a. test-time compute - https://openai.com/index/learning-to-reason-with-llms/) has invaded the news and got to the top of the hype wave. These are generalist and omni-capable models that consume and produce numerous tokens during internal reasoning loops before generating and exposing an actual response. thinking..., wait, maybe..., thinking..., reformulating..., considering a different angle... etc.

Syntonically, the emergence and generalization of agentic systems, systems that require a set of subdivided tasks to be executed in sequence, is a field where calls to LLMs are often chained and numerous for each request processed by such a system. The relevance of those superpowerful models in terms of their efficiency can be questioned under such conditions.

In this context, having the option to use a smaller model that can be adapted to each of the different tasks of an agentic system is appealing. The specialization of a "base" (pretrained) model for a given task typically occurs through supervised fine-tuning. This step involves refining an existing model that has already learned language patterns and rules. This additional training translates into specialization for a given task through examples of "request" and "expected results."

A "base" model becomes fine-tuned to become a specialist in a task. A common example is the "instruct" version, which specializes in chat/discussion, following instructions, and responding to user requests in free text form : "Hello" => "Hello, how can I assist you?".

Thanks to a method introduced in the arXiv paper 2106.09685 "LoRA: Low-Rank Adaptation of Large Language Models" from June 2021, the specialization of a "base" model for a task through supervised fine-tuning can be done at reduced cost and relatively quickly. Other advantages of the supervised fine-tuning method via LoRa adapters include:

- They're pluggable, i.e. the weights of the "base" model are not altered.

- Therefore, several LoRa adapters can be created for the same "base" model: one per task requiring specialization.

- Therefore, several specialist models can be employed without surging the hardware capacity requirements of the server (mainly without compounding the constraints on available GPU vRAM but only slightly increasing it).

State of agentic function-calling

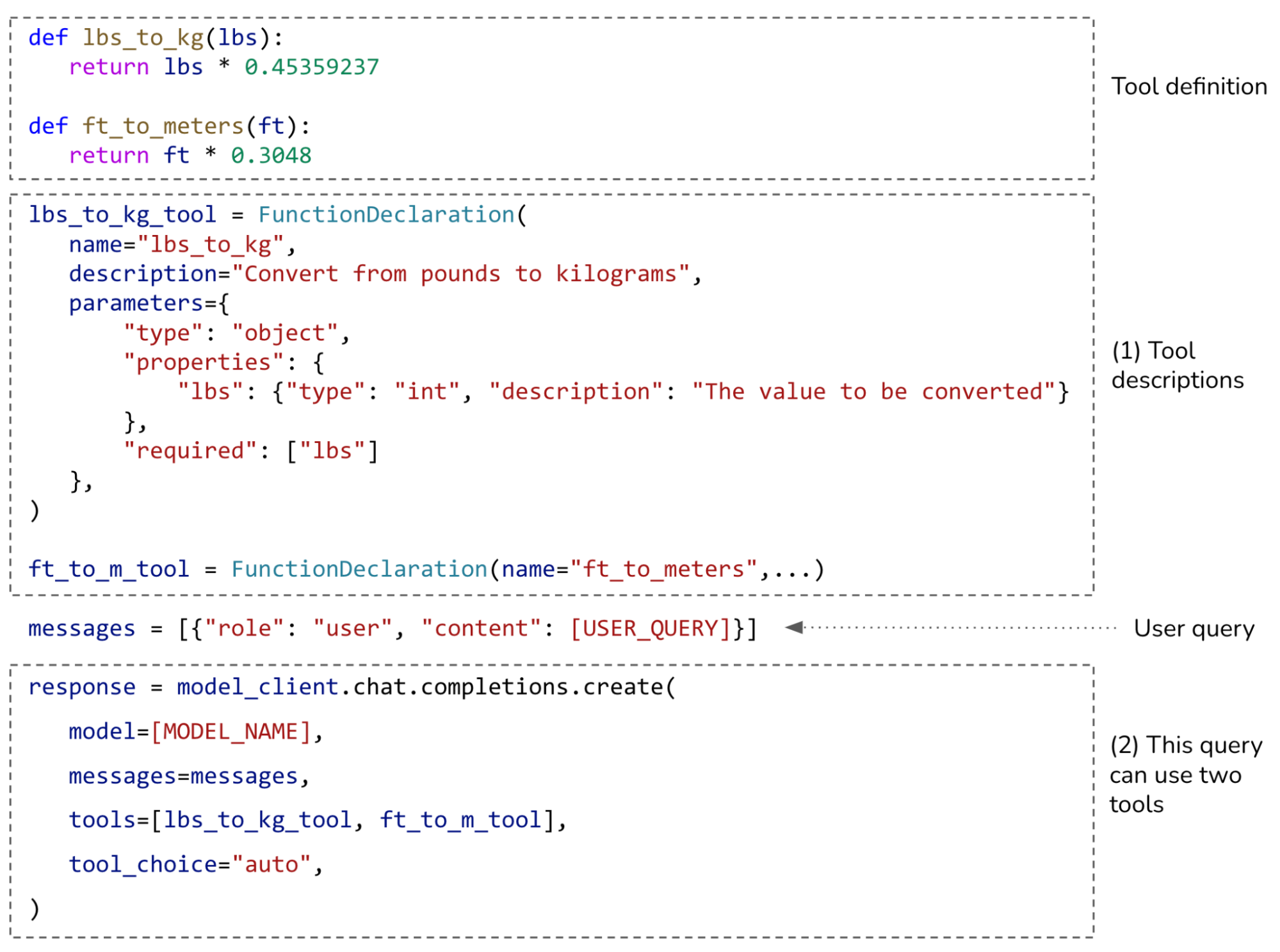

- "User query" =>

- "User query" + a set of definitions of accessible tools as context (generally around half a dozen or less) =>

- LLM + constrained generation (e.g. via pydantic typing or grammar) =>

- Set of actionable tool-call commands =>

- Code interpreter (can be in Python) =>

- Tool calls (can be API calls, from third-party services or for your company's tech teams) =>

- User query + tool-call responses as context =>

- LLM =>

- Response to user

The boom of Model Context Protocols (MCPs) (anthropic.com/news/model-context-protocol) within LLM user circles does not change this situation, since these MCP servers are an abstraction between LLMs and tools. For each MCP server it is "connected" to (via an orchestrator, but let's not let ourselves get distracted here), an LLM continues to receive a set of tool definitions and a query to which it must respond with actionable function calls. A sort of zero-shot prompting, where the context explodes rapidly with the number of MCPs (and their respective many tools) made available to the LLM.

A function-calling LoRa adapter with tools memory

In a professional context on production systems, retraining such a "function-caller specialist adapter" can be justified by the depreciation of a set of tools that become unavailable or by updates to certain tools that imply a change in their definition. It remains possible, between retrainings, to make new tools available to the LLM in the "classic" way of extending the context, which is the norm today.

The rest of this article covers the retraining of a model to confer plug-and-play specialization in function-calling (from knowledge, not passed-on tools context), as well as the delivery of a multi-LoRa inference server, one server that allows switching between specializations from a single "base" model in vRAM.

Other "classic" LoRa adapters that could be deployed in this multi-adapters inference server for instance are of the type "instruct", "query router", "response quality evaluator", "responses aggregator", etc. (@see that blogpost for more inspo on that : anthropic.com/engineering/building-effective-agents). Together, they would form a team of specialists serving distinct functions of the same agent system on a frugal infrastructure. If the "base" model is also "small," as is the case in our example here, then this constellation of agents can be deployed "on edge" for self-hosted locally running systems.

The retrain-pipelines/func_calls_ds training dataset

retrain-pipelines/function_caller_lora model repo here on the Hub.

The first steps consist in creating a two-configs dataset from 2 source datasets :

-

The 2 source datasets :

- function calls : it can be any generic dataset for function calling.

- generic user-queries : it can be any user question/answers dataset, any that isn't related to function calling at all.

- The generated training dataset (with its 2 configs) :

continued_pre_trainingsupervised_finetuning(with splits for training and evaluation)

The goal indeed is twofold :

- grow the knowledge of the "base" model with a bank of tools. We do that through Continued pretraining and specifically the

embed_tokensandlm_headso called saved modules that we couple with the LoRa adapter (more on this in the next section). - train a LoRa adapter specialized in actionable tool-calls that doesn't hallucinate. So we train on actual "query => tool-calls" pair, but also on crafted pairs of "query => no tool-call".

function-calling CPT & SFT training dataset

function-calling CPT & SFT training dataset

Moving along in this article, we're using Salesforce/xlam-function-calling-60k (26d14eb) as the function-calls source dataset and lighteval/natural_questions_clean (a72f7fa) as the generic user-queries source dataset. This leads to retrain-pipelines/func_calls_ds v0.28 with a knowledge bank of 4,200+ tools.

The retrain-pipelines/func_caller_lora model adapter

CPT & SFT adapter training tasks.The resulting trained adapter version has both

saved_modules (on the `embed_tokens` and `lm_head` layers) and LoRas (on the `q_proj`, `k_proj`, `v_proj`, `o_proj`, `gate_proj`, `up_proj` and `down_proj` layers).

base LLM + an on/off knowledge-enhanced task-expert adapter

base LLM + an on/off knowledge-enhanced task-expert adapter

As eluded to here above, this adapter is pluggable on a base model, bringing task-specific expertise to the latter. In our case : function-calling from an extensive intrinsic knowledge bank of tools.

We then evaluate our adapter's performance on the Jaccard index, also known as IoU (Intersection over Union) for each evaluation record. So to penalize on both any "missed tool call" and any "illegitimate (or incorrectly formatted) tool call", given a user query. Note that we purposely do not rely on any extra output-constraining mechanism some third parties provide because they're overkill with the performance even small models provide today.

Lets look into the example of v0.29 of retrain-pipelines/function_caller_lora, which takes unsloth/Qwen2.5-1.5B as a base model. The below figure is an overview of the performance of this base model + our extra-knowledge expert funtion-calling adapter. It shows twice the same data presented with two different groupings :

Evaluation performance chart

Evaluation performance chart

As can be seen on that figure, our model adapter marks an almost perfect score on not calling any tool when the user query doesn't require any. It does pretty well when a single tool-call is to be made too, whith 75% of the ~5,700 records from the evaluation set falling into that category. It does relatively homogeneously good for up to 9 tool calls for a query. That's quite remarkable.

It's important to note that for a tool-call to be considered valid in the above performance computation, for now, we only go the elementary naïve way and the assessed performance measure certainly is quite deceptive (click arrows to expand items) :

-

we, for instance, do not check for valid but different order of appearance of named parameters in inferred function-calls :

query Fetch three chess puzzles with a rating of 1500, focusing on the 'kingsideAttack' theme, and ensure they are from the 'Kings_Gambit_Accepted' opening family. Also, specify that the puzzles should have exactly 5 moves. answer [{"name": "advanced", "arguments": {"number_of_puzzles": 3, "themes": "kingsideAttack", "rating": "1500", "opening_family": "Kings_Gambit_Accepted", "number_of_moves": 5}}] completion [{"name": "advanced", "arguments": {"number_of_puzzles": 3, "rating": "1500", "themes": "kingsideAttack", "number_of_moves": 5, "opening_family": "Kings_Gambit_Accepted"}}] -

we do not use an arithmetic evaluator to check if two writings account for the same value when a parameter is numerical :

query Project the growth of an investment over 10 years, with an initial investment of $1000, an annual addition of $500, an annual return rate of 7%, and an inflation rate of 2% for the first 5 years and 3% for the next 5 years. answer [{"name": "project_investment_growth", "arguments": {"principal": 1000, "annual_addition": 500, "years": 10, "return_rate": 0.07, "inflation": [0.02, 0.02, 0.02, 0.02, 0.02, 0.03, 0.03, 0.03, 0.03, 0.03]}}] completion [{"name": "project_investment_growth", "arguments": {"principal": 1000, "annual_addition": 500, "years": 10, "return_rate": 0.07, "inflation": "[0.02] * 5 + [0.03] * 5"}}] -

we do not acknowledge when default params are explicitly mentioned in inference but not ground-truth (and vice-versa) :

query Fetch the awards summary for the actor with ID 'nm0000243' and also get the top headlines in the technology category for the US in English. answer [{"name": "actors_get_awards_summary", "arguments": {"nconst": "nm0000243"}}, {"name": "top_headlines", "arguments": {"category": "technology", "country": "US"}}] completion [{"name": "actors_get_awards_summary", "arguments": {"nconst": "nm0000243"}}, {"name": "top_headlines", "arguments": {"language": "en", "category": "technology", "country": "US"}}] -

there are records for which the two values differ but both are perfectly valid :

query I'm planning a picnic at Royal Botanic Gardens in Sydney. Are there any schools around the park? answer [{"name": "schools_list", "arguments": {"lat": -33.864108, "lon": 151.217824}}] completion [{"name": "schools_list", "arguments": {"lat": -33.8688, "lon": 151.2093}}] -

there also are records for which there exist more than one tool in the learned knowledge-bank which can make the job :

query Get auto-complete suggestions for 'Apple' in English for stocks and futures. answer [{"name": "auto_complete_deprecated", "arguments": {"text": "Apple", "type": "stock,futures"}}] completion [{"name": "v2_auto_complete", "arguments": {"text": "Apple", "search_type": "stocks_futures"}}] -

it's sometimes just impossible to get the answer right for lack of derivable information, i.e. when the ground-truth answer contains information not present in the query :

query Create a radio playlist for the song 'Bohemian Rhapsody' by Queen. answer [{"name": "get_radio_playlist", "arguments": {"uri": "spotify:track:4u7DsyG53mBfR9WP25O6uG"}}] completion [{"name": "get_radio_playlist", "arguments": {"uri": "spotify:track:3wZkxCWeKu0J5tJ5tJ5tJ5tJ5tJ5tJ5tJ5t -

the model on occasions performs better than the validation record, which sometimes just has an incorrect ground-truth :

query Display the first 50 product reviews for a product with 'def456' as the 'product_id' in 'AU' using the 'en' language, without any rating filter. answer [{"name": "product_reviews", "arguments": {"product_id": "def456", "country": "AU", "language": "en", "limit": "50"}}] completion [{"name": "product_reviews", "arguments": {"product_id": "def456", "country": "AU", "language": "en", "limit": 50}}] query Search for music related to 'The Beatles' and provide the download link for an Instagram reel by 'Emma Watson'. answer [{"name": "music_search", "arguments": {"query": "The Beatles"}}, {"name": "get_media_download_link", "arguments": {"link": "https://www.instagram.com/reel/Bxxxxx/"}}] completion [{"name": "music_search", "arguments": {"query": "The Beatles"}}, {"name": "get_media_download_link", "arguments": {"link": "https://www.instagram.com/reel/Emma Watson"}}] -

and the list goes on.

Recall that we only submit user queries and the model picks-up from his intrinsic knowledge-bank of 4,200+ available tools to generate actionable tool-calls, without the usual extended-context arsenal. Quite a different scenario than the current state of function-calling. And yet, it makes wonders !

Imagine plethora of MCP servers connected to your local agentic system and, no context window overload ever. In the corporate world, such a setup would feel like magic on steroids, like a damn cheat code.

If you're curious, you can go check the pipeline-card

retrain-pipelines generated together with the training of this specific model adapter version here on the Hub.

Multi-adapters single-endpoint server

One thing we didn't mention earlier but which has real importance is that, when during the

SFT training stage the model is provided with "query"/"response" pairs, for the learning to be most effective, those are prepended with a well drafted system prompt. It plays a major role in helping guide the base model into integrating the task-specific expertise right.That means that for inference, it's critical to switch prompt_template when switching between adapters.

You can find the exact prompt_template that goes together with the `retrain-pipelines/function_caller_lora` adapter being depicted in this article by following this GitHub permalink.

As you look into this, it's worth recognizing the special care given to not returning any tool call when none is to legitimately be triggered by a given query.

retrain-pipelines of LitServe by Litghning AI. You can find it in full here. All it takes is a super-easy super-lightweight yaml config file to inform base-model and list of adapters to be loaded (from either the Hub or disk, find a sample here) and voilà.

Consumers of the inference service can query the server on adapters revisions being served via the /adapters endpoint, and from there, build their inference requests. Responses to queries can be requested in batch :

|

func_caller_lora adapter |

curl -X 'POST' \

|

`func_caller_lora` adapter activated, inference server response

`func_caller_lora` adapter activated, inference server response

|

|

no adapter raw base-model |

curl -X 'POST' \

|

no adapter, base-model inference server response

no adapter, base-model inference server response

|

It's easy to see how valuable such setups are. An army of specialized soldiers, right at our fingertip, no context-window overhead, no hardware requirement explosion.

Forward statement

- iterate on data cleaning to ensure only valid SFT records make it through

- use PPO to learn from SFT errors by comparing against ground-truth

- make adapters per domain or department if needed, plug them into the same server

- monitor tool definition drift and retrain periodically

- benchmark inference latency under multi-adapter setups

Those "sample pipelines" delivered together with the retrain-pipelines Apache 2 library are meant to be :

- inspirational for people to see how useful automated retraining can be in scaled-up scenarios,

- educational tools for some and a starting point to quick-start their journey, wherever they may lead them, for many.

The use-case covered in this article is intended to push people into thinking ahead into large scale adaptable corporate agentic systems with large tools-base (soon to be called "normal life stuff"), models adapting as tools change, and retraining not as a luxury but as infrastructure.

In the near future, tools (probably MCP servers or a successor) will be version-controlled, deprecated, upgraded.. all the time. There will soon be MCP-dev folks same as there are today in-house API-dev folks deployed all across organizations worldwide.

This isn’t just about smart models. It’s about building adaptive infrastructure for smart companies.

Let’s build forward.

Also, the real-world scenario wave is gonna catch up on you, unless you start working on your passion project real' early folks.

Also, the real-world scenario wave is gonna catch up on you, unless you start working on your passion project real' early folks.To the moon and beyond is dead. Long live : Go crazy, peops' !